Artificial Intelligence: Agents

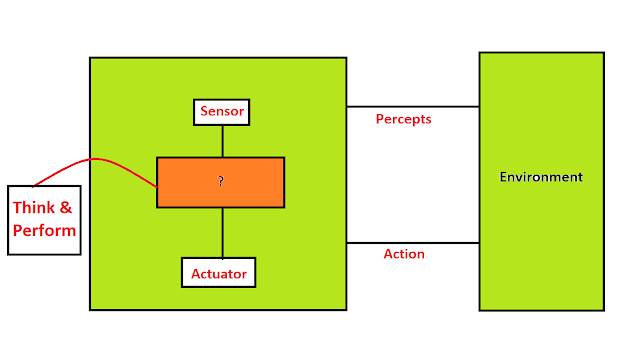

When we talk about Artificial Intelligence, we need to talk about how it interacts with its environment. An AI system does this through an agent, so let's talk about agents.

What is an Agent?

As I see it, an agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators. Humans are agents too: eyes, ears and other organs are the sensors, while hands, legs and so on act as actuators. Robots can be agents as well, so let's look at robotic agents in more depth.

Robotic agents

Sensors: Cameras and infrared rangefinders

Actuators: Various motors
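To make the sensor and actuator idea concrete, here is a minimal sketch in Python. The names `run_agent` and `obstacle_avoider`, and the "near"/"clear" rangefinder readings, are my own assumptions for illustration and not part of any real robot API.

```python
from typing import Callable, List

# Hypothetical types for illustration: a percept is a sensor reading,
# an action is a command sent to the actuators.
Percept = str
Action = str


def run_agent(agent: Callable[[Percept], Action], percepts: List[Percept]) -> List[Action]:
    """Feed each sensor reading to the agent and collect its actuator commands."""
    return [agent(p) for p in percepts]


def obstacle_avoider(percept: Percept) -> Action:
    """Toy robotic agent: the rangefinder is assumed to report 'near' or 'clear'."""
    return "turn" if percept == "near" else "forward"


actions = run_agent(obstacle_avoider, ["clear", "near", "clear"])
print(actions)  # ['forward', 'turn', 'forward']
```

The agent here is just a function from a percept to an action, which is the simplest way to read the definition above.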

On the basis of their behavior in the environment, robotic agents are classified as:

1)Well-Behaved Agent

2)Non-Well-Behaved Agent

Do you know about the well-behaved agent? Whether an agent is well behaved at any given time depends on:

1)The performance measure that defines the criteria of success.

2)The agent's prior knowledge of the environment.

3)The agent's percept sequence to date.

"Agents are vulnerable to different environments"

1)Fully observable

An agent's sensors give it complete access to the state of the environment at each point in time.

2)Deterministic

The next state of the environment is completely determined by the current state and the action executed by the agent. If the environment is deterministic except for the actions of other agents, it is called strategic.

3)Episodic

The agent's experience is divided into atomic episodes (each episode consists of the agent perceiving and then performing a single action), and the choice of action in each episode depends only on the episode itself.

On the basis of the actions they take in the environment, agents are classified as:

1)Model-Based Reflex Agent

It handles partial observability by keeping track of the part of the world it can't see now. Its internal state depends on the percept history.

The model of the world is based on:

1)How the agent's actions affect the world.

2)How the world evolves independently of the agent.

This type of agent uses a mapping from state to action.
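Here is a small sketch of the idea, assuming a made-up two-room world ("A" and "B") where the agent can only see the room it is standing in. The class name `ModelBasedVacuum` and the action names are hypothetical. The world model is deliberately simple: sucking cleans a room, and a clean room is assumed to stay clean.

```python
class ModelBasedVacuum:
    """Toy model-based reflex agent for a hypothetical two-room world."""

    def __init__(self):
        # Internal state: last known status of each room (None = never seen).
        self.known = {"A": None, "B": None}

    def act(self, location, is_dirty):
        if is_dirty:
            # Model of the agent's own action: sucking leaves this room clean.
            self.known[location] = "clean"
            return "suck"
        # Update the internal state from the current percept.
        self.known[location] = "clean"
        other = "B" if location == "A" else "A"
        # The other room cannot be seen now, so rely on the remembered state.
        # Assumed world model: a room that was clean stays clean.
        if self.known[other] == "clean":
            return "idle"
        return "right" if location == "A" else "left"


agent = ModelBasedVacuum()
trace = [
    agent.act("A", True),   # dirt seen in A
    agent.act("A", False),  # A is clean, B has never been seen
    agent.act("B", False),  # B is clean, A is remembered as clean
]
print(trace)  # ['suck', 'right', 'idle']
```

The last decision is the interesting one: the agent stops without looking at room A again, because its internal state already covers the part of the world it cannot see.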

2)Goal-Based Agent

Knowing the current state of the environment is not enough; the agent also needs some goal information. The agent program combines the goal information with the environment model to choose the actions that achieve the goal. These agents are flexible because their knowledge is represented explicitly and can be modified as needed. For example, a student goes to school with the goal of learning and getting good marks.
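A rough sketch of that combination, assuming a one-step lookahead and a toy light switch as the environment. The function names `goal_based_action` and `light_model` are my own and only illustrate the idea.

```python
def goal_based_action(state, goal, actions, model):
    """Return the first action whose predicted outcome reaches the goal, or None."""
    for action in actions:
        if model(state, action) == goal:
            return action
    return None


def light_model(state, action):
    """Hypothetical environment model: 'toggle' flips the light, 'wait' does nothing."""
    if action == "toggle":
        return "on" if state == "off" else "off"
    return state


# Same agent code, same model, different goals give different behavior.
print(goal_based_action("off", "on", ["wait", "toggle"], light_model))   # toggle
print(goal_based_action("off", "off", ["wait", "toggle"], light_model))  # wait
```

Changing only the goal changes what the agent does, which is the flexibility described above. A real goal-based agent would usually need to look more than one step ahead.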

3)Utility-Based Agent

Sometimes achieving the desired goal is not enough: we may want a safer, quicker or cheaper trip to reach the destination. The agent's happiness should be taken into consideration, and we call it utility. A utility function is the agent's own measure of performance. Because of the uncertainty in the world, a utility-based agent chooses the action that maximizes the expected utility.
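Expected utility is just the probability-weighted average of the utilities of the possible outcomes. A minimal sketch, with made-up routes, probabilities and utility values chosen only for illustration:

```python
def expected_utility(outcomes):
    """outcomes is a list of (probability, utility) pairs for one action."""
    return sum(p * u for p, u in outcomes)


def best_action(options):
    """Pick the action whose expected utility is highest."""
    return max(options, key=lambda action: expected_utility(options[action]))


# Hypothetical numbers: the highway is usually fast but sometimes jammed.
routes = {
    "highway": [(0.8, 10.0), (0.2, -20.0)],  # 0.8 * 10 + 0.2 * (-20) = 4
    "back_road": [(1.0, 6.0)],               # 1.0 * 6 = 6
}

print(best_action(routes))  # back_road
```

Both routes reach the destination, so a goal-based agent would be indifferent. The utility-based agent prefers the back road because its expected utility is higher once the risk of a jam is counted.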

Do you know that programming an agent by hand can be very tedious? Coding every process into the agent is impossible, which is why we build agents that learn and perform by themselves. An agent should always be its own critic.

Do you know about Mazes?

I think all of us played this game as kids, but it is quite interesting to see how an AI approaches it. Every path has to be searched to see whether it leads to the final state; if it does not, another path has to be tried. The search agent here needs to explore paths from the start to the goal.
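One possible way for a search agent to explore a maze is breadth-first search. That choice is mine; any systematic search would fit the description above. In this sketch the maze is a list of strings where "#" is a wall, and the function name `solve_maze` is hypothetical.

```python
from collections import deque


def solve_maze(grid, start, goal):
    """Breadth-first search over open cells; returns the path as (row, col) cells, or None."""
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}      # remembers how each cell was reached
    frontier = deque([start])   # cells waiting to be explored
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            # Walk back through the parents to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in parent:
                parent[(nr, nc)] = cell
                frontier.append((nr, nc))
    return None  # every reachable cell was tried and none was the goal


maze = [
    "S.#",
    "#.#",
    "#.G",
]
path = solve_maze(maze, (0, 0), (2, 2))
print(path)  # [(0, 0), (0, 1), (1, 1), (2, 1), (2, 2)]
```

If no path exists, the frontier eventually runs empty and the function returns `None`, which matches the "try again until nothing is left" idea.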

A search agent solves such problems in a two-stage process:

a)Goal Formulation

b)Problem Formulation

a)Goal Formulation

A goal is given to the agent, and the agent adopts it as the target for its future work.

b)Problem Formulation:

1)Initial State:-The state in which the agent starts.

2)States:-All the states reachable from the initial state by any sequence of actions. This set is called the state space.

3)Actions:-The possible actions available to the agent. At a state S, Actions(S) returns the set of actions that can be executed in S.

4)Transition Model:-A description of what each action does.

5)Goal Test:-Determines whether a given state is a goal state.

6)Path Cost:-A function that assigns a numeric cost to each path, with respect to the performance measure.
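The six parts above can be bundled into one small data structure. This is only a sketch: the `Problem` class, its field names and the toy counting problem are assumptions for illustration. The state space is not stored explicitly; it is whatever can be reached by applying `result` from the initial state.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List, Tuple


@dataclass
class Problem:
    initial_state: Any
    actions: Callable[[Any], Iterable[Any]]   # Actions(S)
    result: Callable[[Any, Any], Any]         # transition model
    goal_test: Callable[[Any], bool]
    step_cost: Callable[[Any, Any], float]    # cost of one action in one state


def path_cost(problem: Problem, action_sequence: List[Any]) -> Tuple[Any, float]:
    """Apply the actions in order; return the final state and the summed cost."""
    state, total = problem.initial_state, 0.0
    for action in action_sequence:
        total += problem.step_cost(state, action)
        state = problem.result(state, action)
    return state, total


# Toy problem: start at 0, add 1 or 2 per step, reach exactly 3.
counter = Problem(
    initial_state=0,
    actions=lambda s: [1, 2],
    result=lambda s, a: s + a,
    goal_test=lambda s: s == 3,
    step_cost=lambda s, a: 1.0,
)

final_state, cost = path_cost(counter, [1, 2])
print(final_state, cost, counter.goal_test(final_state))  # 3 2.0 True
```

Here the path cost is the sum of the step costs, which is a common choice but not the only one.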

Now, if a computer is given the 8-Queens Problem, it can be formulated as follows:

States:-Any arrangement of 0 to 8 queens on the board

Initial State:-No queens on the board

Actions:-Add a queen to an empty square

Transition Model:-Returns the board with the queen added to the chosen square

Goal Test:-8 queens on the board with none attacked
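Here is a sketch of that formulation in Python. One loud assumption: to keep the search small, `actions` only offers safe squares in the next empty column instead of every empty square on the board, which is a restriction I added and not part of the formulation above. All function names are hypothetical.

```python
def attacks(q1, q2):
    """Two queens attack each other on the same row, column or diagonal."""
    (r1, c1), (r2, c2) = q1, q2
    return r1 == r2 or c1 == c2 or abs(r1 - r2) == abs(c1 - c2)


def goal_test(queens, n=8):
    """n queens on the board with none attacked."""
    qs = list(queens)
    return len(qs) == n and not any(
        attacks(qs[i], qs[j]) for i in range(len(qs)) for j in range(i + 1, len(qs))
    )


def actions(queens, n=8):
    """Assumed restriction: only safe squares in the next empty column."""
    col = len(queens)
    return [(r, col) for r in range(n) if not any(attacks((r, col), q) for q in queens)]


def result(queens, square):
    """Transition model: the board with one more queen on it."""
    return queens + (square,)


def solve(queens=(), n=8):
    """Depth-first search from the empty board (the initial state)."""
    if len(queens) == n:
        return queens if goal_test(queens, n) else None
    for square in actions(queens, n):
        found = solve(result(queens, square), n)
        if found is not None:
            return found
    return None


solution = solve()
print(solution)  # a tuple of 8 (row, col) squares
```

A state is a tuple of (row, col) squares, so the empty tuple is the initial state with no queens on the board.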

If you want to learn more about AI, give my previous blogs a read.
